In recent years, and especially since the introduction of OpenAI’s DALL-E and ChatGPT, the terms “artificial intelligence” (AI) and “machine learning” (ML) have become commonplace. What was long considered science fiction is gradually becoming reality; in fact, we are surrounded by artificial intelligence almost every moment of our daily lives. Our Facebook browsing and Google searches, the algorithms that suggest new shows on Netflix and unfamiliar songs on Spotify, and even the autonomous driving capabilities of modern vehicles are all examples of artificial intelligence trained to perform various technical tasks: to make our day-to-day activities easier, to make our experience across various services smoother, and to simplify technical and repetitive work for human operators.

Over the past twenty years, numerous start-up companies around the world have focused on AI-based medical and dental solutions. What began as rather modest attempts to build natural language processing engines for computerized reading of medical records and systematic extraction of information from them has, in recent years, become a real revolution, especially in medical image recognition. Dozens, if not hundreds, of companies around the world are competing for the attention of hospital imaging departments. They offer solutions that may ease radiologists’ workload, help prioritize patient cases, and alleviate the associated professional burnout through AI-based identification of various pathological conditions.

Here, however, general radiology has encountered a problem. Since “narrow” AI is usually trained to recognize one specific thing, each AI model can address only one type of lesion or disease. To provide a comprehensive AI-based solution to a hospital imaging department, one must acquire and integrate many software solutions, each from a different manufacturer. To this day, that task remains practically impossible.

Fortunately, creating a comprehensive diagnostic tool is much easier in dentistry. Most patients who come in for a routine dental checkup present a fairly limited variety of clinical conditions: mainly periodontal attachment loss and carious lesions, along with various endodontic and periapical conditions. From a purely statistical point of view, these diagnoses are at the core of dentistry. It should therefore be relatively easy to create an AI-based diagnostic system that covers more than 90% of the patients seen by a general dental practitioner in a community setting.

A dentist must spend valuable time reviewing X-rays or cone beam CT (CBCT) scans and looking for abnormal findings. A computerized system, by contrast, can analyze them in a matter of minutes with high average accuracy, highlighting hard-to-identify radiologic findings that the clinician may have missed. Unlike a human, a computerized system does not get tired, and its ability to concentrate on a specific case does not decline over the course of the working day.

Indeed, several AI-based dental imaging solutions that review dental X-rays and produce reports have appeared recently. Most of them are based on 2D image recognition models and therefore specialize in 2D imaging, namely bitewing, periapical, and panoramic (OPG) X-rays. A smaller number of more advanced solutions use convolutional neural networks designed for 3D volumes, which makes them suitable for interpreting dental CBCT as well.

The use of CBCT in dentistry has become commonplace in recent years. The technical capabilities of CBCT devices are steadily improving and radiation exposure is gradually decreasing, and interpretation methods and viewers have improved as well. Nevertheless, for many dentists, interpreting a 3D CBCT volume remains a challenge, or even a disruption of the daily routine. Because CBCT interpretation requires additional training and considerable time, a large share of the information it contains is lost, reducing its diagnostic benefit.

Innovative AI-based decision support systems have entered this space. These systems analyze the scans and images and look for abnormal findings, depending on what each system has been trained to identify. They then highlight those findings for the dental practitioner, saving time and helping to prevent underdiagnosis.

Generally speaking, the AI tools currently available in the dental market can be divided into three types:

- Review of findings – identification and numbering of teeth on 2D X-rays or 3D CBCT images; identification of basic dental anatomy (roots and canals); identification of previous treatments performed on the teeth and related defects; identification of dental and gingival lesions; and identification of lesions and abnormal findings in the jaws and maxillary sinuses.

- Automated measurement tools – alveolar bone measurements for dental implant placement; root canal measurements for endodontic treatment; volumetric measurement of periapical lesions for clinical decision-making and follow-up; cephalometric measurements; and measurement of upper airway volume (for orthodontic treatment, as well as sleep apnea screening).

- Segmentation tools – convert the 3D CBCT volume into digital models, which can then be transferred to specialized software for orthodontic, prosthodontic, or surgical planning. Combining CBCT with intraoral and facial scans is another step toward a more complete “virtual patient”. Treatment can be planned more accurately on this virtual patient, after which the resulting designs are sent to computer-aided manufacturing and, finally, placed in the patient’s mouth.

Since 2019, I have used artificial intelligence tools in the examination of all my patients. I will now present several clinical cases from my daily practice in which artificial intelligence was used as an aid for informed decision-making in diagnosis and treatment planning. I deliberately chose routine cases rather than “heroic battle tales”. In the presentation below, I focus on the examination and decision-making process rather than on the treatment itself.

Clinical Case No. 1

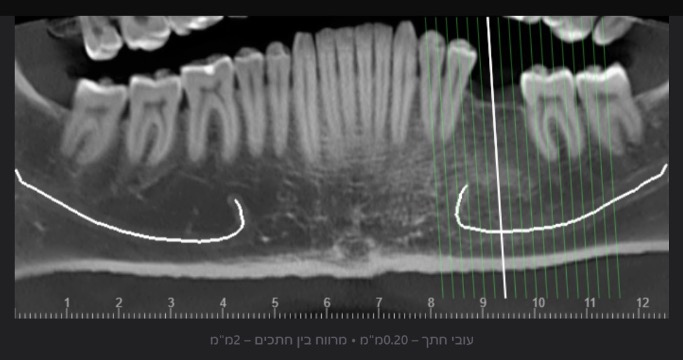

R., a 40-year-old man, came to the dental clinic with a previously extracted tooth #19 (ISO 36) and requested an implant-supported crown. He was referred for a CBCT at an imaging center. The resulting 3D volume was uploaded to an artificial intelligence system (Diagnocat, Diagnocat Inc., USA) for automatic slicing, detection of the inferior alveolar nerve, and alveolar ridge measurements. In parallel, a radiographer at the imaging center prepared a manual cross-sectional report, which was attached to the 3D DICOM file.

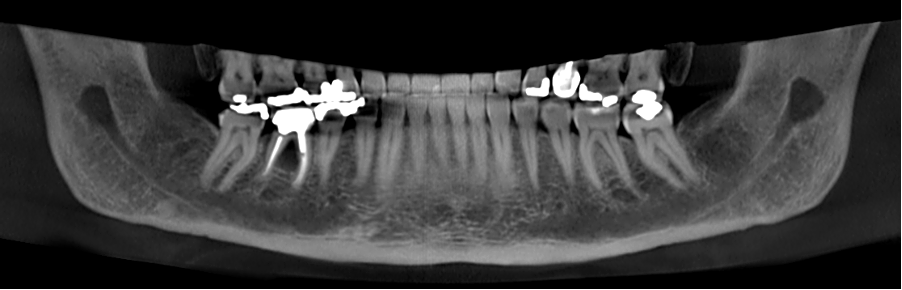

In the panoramic reformatted image produced as part of the AI-based cross-sectional report, the inferior alveolar nerve appeared to form a loop toward the mandibular foramen, close to the future implant site (Fig. 1A). Unfortunately, the human interpreter did not mark this loop (Fig. 1B), perhaps because the panoramic section was positioned differently.

Fig. 1A

Fig. 1B

In light of this discrepancy, I ordered segmentation of the 3D volume into a digital STL model to further assess the distance between the mandibular foramen and the planned implant site. The cross-sectional measurements (Fig. 2) and the segmentation into a 3D model (Fig. 3) were carried out by the same artificial intelligence system (Diagnocat, Diagnocat Inc., USA). These made it possible to determine the exact location of the nerve and to confirm that it was safely out of harm’s way in this case.

Fig. 2

Fig. 3A

Fig. 3B

Fig. 3C

Clinical Case No. 2

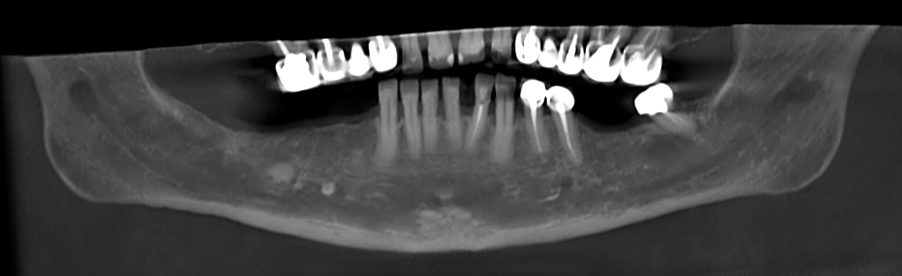

A 68-year-old female patient interested in dental implants in the lower jaw came to the clinic for an examination and was subsequently referred for a CBCT scan. As part of the clinic’s routine examination process, the patient’s imaging files were uploaded to an artificial intelligence system (Diagnocat, Diagnocat Inc., USA). The system indicated a lesion in the right posterior region of the lower jaw (Fig. 4A-B). The mixed lesion, with a hyperdense core surrounded by a hypodense rim, is most likely fibro-osseous. In a joint consultation with an oral and maxillofacial surgeon, it was decided to leave the lesion for follow-up at this stage. It was also decided that implant placement mesial to the lesion was possible.

Fig. 4A

Fig. 4B

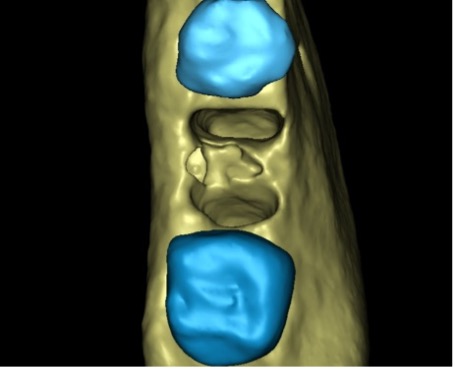

The patient still wished to have implants placed, so a three-dimensional segmentation was carried out. Its purpose was to further examine the ridge anatomy and to assess the possibility of designing a surgical guide that would allow implant placement mesial to the mixed lesion. The lesion is also clearly visible in the 3D model (Fig. 5A).

Fig. 5A

The 3D model also clearly shows the narrow shape of the patient’s residual ridge (Fig. 5B-C). This information helped us plan the treatment, explain it thoroughly to the patient, and obtain informed consent for all stages of the procedure (Fig. 6).

Fig. 5B

Fig. 5C

Fig. 6

Clinical Case No. 3

A 67-year-old female patient presented to our clinic in early 2019, complaining of pain in the right posterior region of the mandible. After a clinical examination that included in-office X-rays, she was referred for a CBCT scan of the lower jaw for in-depth investigation of a lesion between the roots of tooth 46. Fig. 6 shows the panoramic reformatting of her CBCT performed using artificial intelligence (Diagnocat, Diagnocat Inc., USA).

The AI also provided preliminary diagnoses (Fig. 7), including a radiolucent lesion in the furcation area. The lesion was clearly demonstrated in the AI-generated cross-sections.

Fig. 7

To clarify the findings further, a multiplanar view was used, in which the lesion was also clearly visible (Fig. 8).

Fig. 8

I ultimately decided to extract the tooth. To plan the extraction and the subsequent treatment steps, AI-based segmentation was ordered and a three-dimensional model was created (Fig. 9).

Fig. 9

By “virtually extracting” the tooth from the model, we can estimate the size of the bone defect created by the furcation lesion. We can also examine the alveolar bone from different angles (Fig. 10A-D), which is not easily done with 2D X-rays or conventional viewers. In other words, we can see the shape of the residual alveolar bone before the extraction takes place!

Fig. 10A

Fig. 10B

Fig. 10C

Fig. 10D

In light of this imaging, I decided to follow the extraction with socket preservation. Four months later, an implant was placed. After an additional three months of healing to allow osseointegration, it was restored with a telescopic abutment (Abracadabra Implants Ltd., Israel) and a zirconia crown cemented with temporary cement.

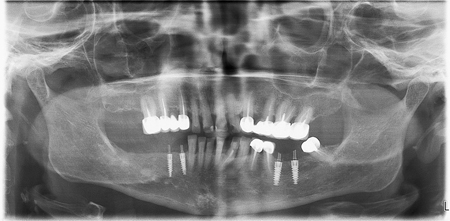

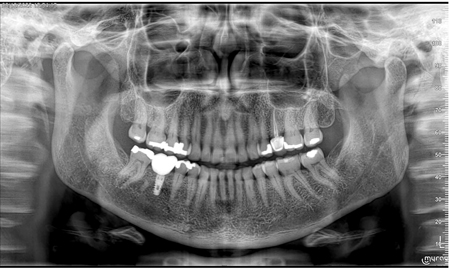

Because of the COVID-19 pandemic, the patient did not return for follow-up for some time. When she finally did, in December 2022, complete and excellent bone healing was evident around the implant and under the crown (Fig. 11).

Fig. 11

Discussion, Summary, and Conclusions

In a 2020 paper published in the International Endodontic Journal, Orhan et al. examined the accuracy of periapical lesion volume measurements performed by artificial intelligence software compared with those of a human radiologist. One of the study’s conclusions was that the AI measurements were as accurate as those performed by a human and could therefore serve as a reliable substitute.

About a year later, Ezhov et al. published the results of a comprehensive study by an international research group that included senior dental and maxillofacial radiologists from several centers in Europe and the United States. The study’s most important conclusion concerned diagnostic accuracy on the same clinical cases. Dentists who used artificial intelligence achieved statistically significantly more accurate diagnoses than those who did not.

It is difficult to predict when AI will be mature enough to complete a patient’s examination accurately without the dentist having to verify the diagnoses, and when we will be able to trust it to do so. Even today, however, AI-generated findings make it a clinically significant and useful tool for every dentist, from new graduates to seasoned specialists. AI is a decision support system. It provides convenient tools such as a detailed review of findings, enhanced visualization, automated cross-sectional reports, and digital models created at the touch of a button, each of which improves the accuracy of diagnosis and treatment. Artificial intelligence is no longer science fiction; it is a reality.

Oral Health welcomes this original article.

References

- Orhan K, Bayrakdar IS, Ezhov M, Kravtsov A, Özyürek T. Evaluation of artificial intelligence for detecting periapical pathosis on cone-beam computed tomography scans. Int Endod J. 2020 May;53(5):680-689. doi: 10.1111/iej.13265. Epub 2020 Feb 3. PMID: 31922612.

- Ezhov M, Gusarev M, Golitsyna M, Yates JM, Kushnerev E, Tamimi D, Aksoy S, Shumilov E, Sanders A, Orhan K. Clinically applicable artificial intelligence system for dental diagnosis with CBCT. Sci Rep. 2021 Jul 22;11(1):15006. doi: 10.1038/s41598-021-94093-9. Erratum in: Sci Rep. 2021 Nov 9;11(1):22217. PMID: 34294759; PMCID: PMC8298426.

About the Author

Vladislav (Vladi) Dvoyris is a general dental practitioner based in Tel Aviv, Israel. He is a Board Member of the Israel Association of Oral Implantology, a member of the Israel Society of Digital Dentistry and the Israel Society of Prosthodontics, and a Fellow of the International College of Dentists (ICD).